There comes a time in every programmer’s life when they take a webcam and get the idea to programmatically save whatever it’s filming, for hilarious purposes. Usually such purposes are best served by some (semi-)interpreted language that offers a very high-level API, one that abstracts away the low-level details of begging the OS for a buffer with some pixel values in it. But sometimes you don’t have the luxury of assuming that whoever is going to use your stuff has the latest and greatest in raw computing power, so you have to go down a few levels until you reach the inhospitable land of platform-dependent APIs.

To be more specific, I’m talking about DirectShow here, of course. Other platforms will probably follow as I go through them, but for now let’s focus on this one. I’m pretty sure that at some point during the development of Windows, programmers sent angry complaint letters about how they write too little code to make things work and how their bosses always think they do nothing because of that. And that is how COM was born.

The idea behind COM isn’t all that bad, though. With C++ not having a standard ABI, this is the next best thing: when you query an interface you know that the calling convention is stdcall and that strings are always wide-character Unicode, and you get a ref count and a separate COM heap, so you can stop worrying about incompatibilities between runtimes if you somehow end up deleting an object someone else gave you. All in all, a very good framework in theory. Practice, however, teaches us that in this case abstraction led to more abstraction, and it’s pretty easy to lose yourself in the layers.

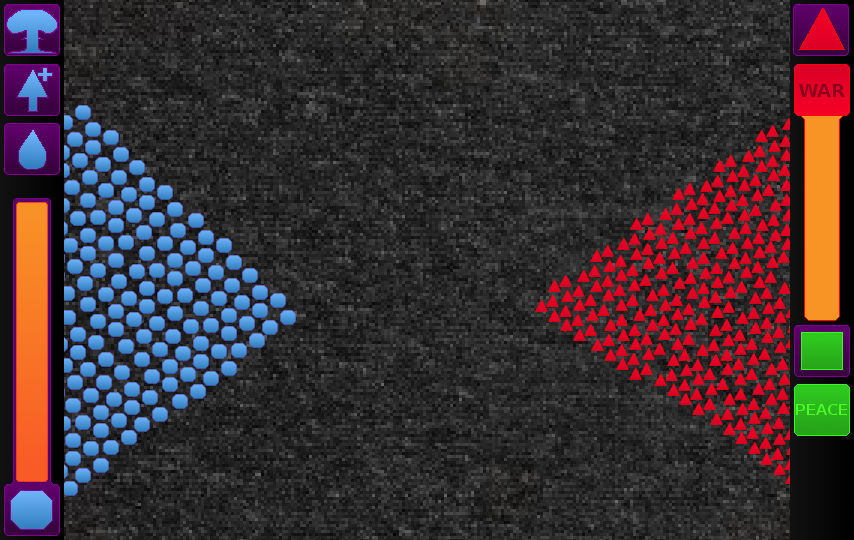

So, back to our goal. The first thing you need to know about DirectShow is that it has a filter graph, and the graph is made out of tiny black boxes called filters. Each filter has one or more input pins and/or one or more output pins, and you connect the output pins of one filter to the input pins of another. So the first thing you need to do is get a filter that will connect to a device and output the video. That’s pretty easy: you call BindToObject on the device’s moniker, ask for an IBaseFilter, and you’re mostly done.

The next step is the tedious one. First you have to implement an IBaseFilter: keep a FILTER_INFO structure in your class and fill it in JoinFilterGraph with the arguments you receive. The rest of it should be straightforward. Unfortunately, for the filter to actually work you have to implement every method of the interface, but most of them are one or two lines of code.

After you’re done with the filter, you have to start on the pins. I used one pin for audio and one pin for video because I don’t really like to mix functionality; how you go about it is your choice. In any case, to be able to save video/audio data you have to implement both IPin and IMemInputPin. Since neither of them is declared with virtual inheritance, the easiest way is to use aggregation and have the two implementations hold a pointer to each other (the IPin has a pointer to the IMemInputPin and the IMemInputPin has one to the IPin). I used a plain pointer as a weak reference to the IPin inside the IMemInputPin, and a CComPtr to the IMemInputPin inside the IPin, so that I don’t have to worry about the ref count.

The good part is that, for the purpose of capturing stream data, you don’t actually need to seriously implement everything in the interface. You can forget about the flush methods (just return S_OK or something), and EndOfStream, Connect and NewSegment are insignificant as well. QueryAccept should be implemented to accept everything; you’re far better off controlling the data type by querying the camera. For the IMemInputPin, you can forget about the allocators. ReceiveCanBlock should return S_FALSE, which means Receive won’t block, and Receive is where you do your actual saving. The only thing you need to watch out for is that the IMediaSample you receive sometimes returns S_FALSE when you ask it for the media type. That’s its way of saying that the format hasn’t changed and you need to use the last known media type, which is most likely the one you received when you first connected the pin.
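The "last known media type" rule is easy to get wrong, so here is a minimal, portable sketch of just that bookkeeping. It does not touch the real DirectShow headers: `FrameFormat` and `FormatTracker` are hypothetical names made up for illustration, with `FrameFormat` standing in for AM_MEDIA_TYPE, and a null pointer standing in for the case where the sample answers S_FALSE.

```cpp
#include <string>

// Hypothetical stand-in for AM_MEDIA_TYPE so the sketch compiles anywhere.
struct FrameFormat {
    std::string subtype;  // e.g. "YUY2" or "RGB24"
    int width;
    int height;
};

// Remembers the format agreed on at connection time and replaces it only
// when a sample actually carries a new one.
class FormatTracker {
public:
    // Call this from ReceiveConnection with the media type you accepted.
    void onConnect(const FrameFormat& fmt) { current_ = fmt; }

    // Call this from Receive. Pass nullptr when the sample reported
    // "unchanged" (the S_FALSE case), otherwise the format it handed back.
    const FrameFormat& resolve(const FrameFormat* fromSample) {
        if (fromSample != nullptr) {
            current_ = *fromSample;  // format changed mid-stream
        }
        return current_;
    }

private:
    FrameFormat current_{};
};
```

In a real pin you would copy the fields you care about out of the AM_MEDIA_TYPE the sample gives you and then free that structure, since the caller owns it.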

Fortunately, after all this is over and you realise you just spent two days of your life implementing interfaces that you aren’t sure will work, the graph system we learned about earlier will figure out by itself what connects where when you call the RenderStream function. You just need to call AddFilter for the filters we implemented earlier. If you’re lucky and you’ve considered everything, it will just work and you should see some RGB data saved to disk (I recommend the PPM format for RGB stills and Y4M for YUV movies). If not, the first thing to do is check that every component is being initialized: whether ReceiveConnection is called on your IPin, whether Receive is called on your IMemInputPin, and so forth.

There’s also the problem of having to write the enumerator interfaces for the filter’s pins and for the pins’ media types. If your goal is only to capture video/audio, you can make one filter for audio capture and one for video capture, each containing a single input pin that saves its stream. The pin enumerator can then just return a pointer to that one pin, which simplifies the implementation a lot. For the media type enumeration, return an AM_MEDIA_TYPE with a null pbFormat and GUID_NULL for every GUID in there. This tells DirectShow that you don’t really care about the format of the pin that connects to yours, which avoids some other problems later on. If you need to choose a format, you can use the IAMStreamConfig interface, exposed by the output pin of the filter you got from the bind, to select a configuration that suits your needs.

Oh, and if you need to allocate or free memory, always use CoTaskMemAlloc and CoTaskMemFree.

Conclusions? It will take you 1000+ lines of code and about 2 days to move some YUV and WAV data from memory into a file.